One of the most common scenarios I run into in Splunk is needing data from two different indexes at once, typically to build management and reporting dashboards. With my background in developing applications on relational databases, my first attempts at solving this used the “join” command in Splunk. Once I realized that a combination of the “append” and “stats” commands can be a better choice, I started using those more. But today I will show an even better, faster approach!

Watching cartoons as a kid, I remember Bugs Bunny asking “one lump or two?” to Pete Puma. Bugs wound up giving poor Pete lots of lumps. The analogy here is that searches are lumps, we Splunk admins are Bugs Bunny, and Splunk is Pete Puma… We don’t want to give Splunk any lumps, ok? :)

By dropping the “append” command entirely, I improved my search query performance by an order of magnitude (10x). My searches also turned out to be much more accurate!

Old way:

index=foo earliest=-30d latest=now | append [search index=bar earliest=-30d latest=now] | bin _time span=1d | stats count by index

New way (faster!):

(index=foo) OR (index=bar) earliest=-30d latest=now | bin _time span=1d | stats count by index

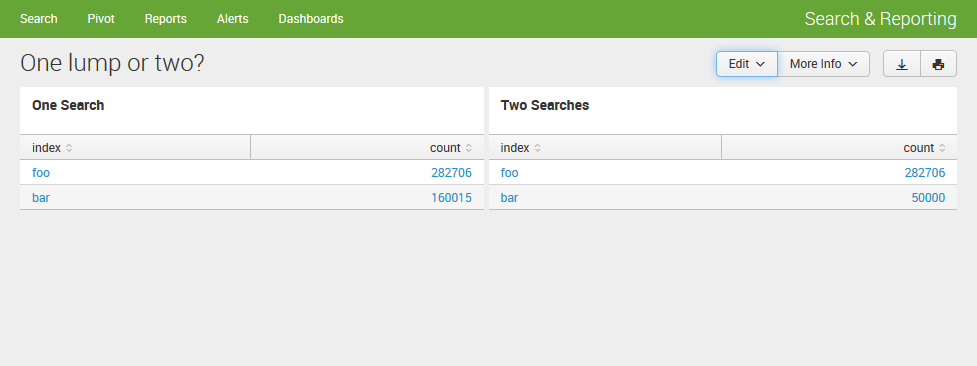

Check out this dashboard screenshot to see the difference between these two searches.
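The same single-search pattern can often stand in for “join” as well. Here is a minimal sketch, assuming both indexes share a key field; the field names ticket_id, status, and owner are hypothetical and not from my real data:

```
(index=foo) OR (index=bar) earliest=-30d latest=now
| stats values(status) AS status, values(owner) AS owner, dc(index) AS index_count BY ticket_id
| where index_count=2
```

The stats command groups events from both indexes by the shared key, and the final where clause keeps only keys that appeared in both indexes, which roughly mimics an inner join. Drop the where clause if you want something closer to an outer join.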

Problems with “append” and “join”

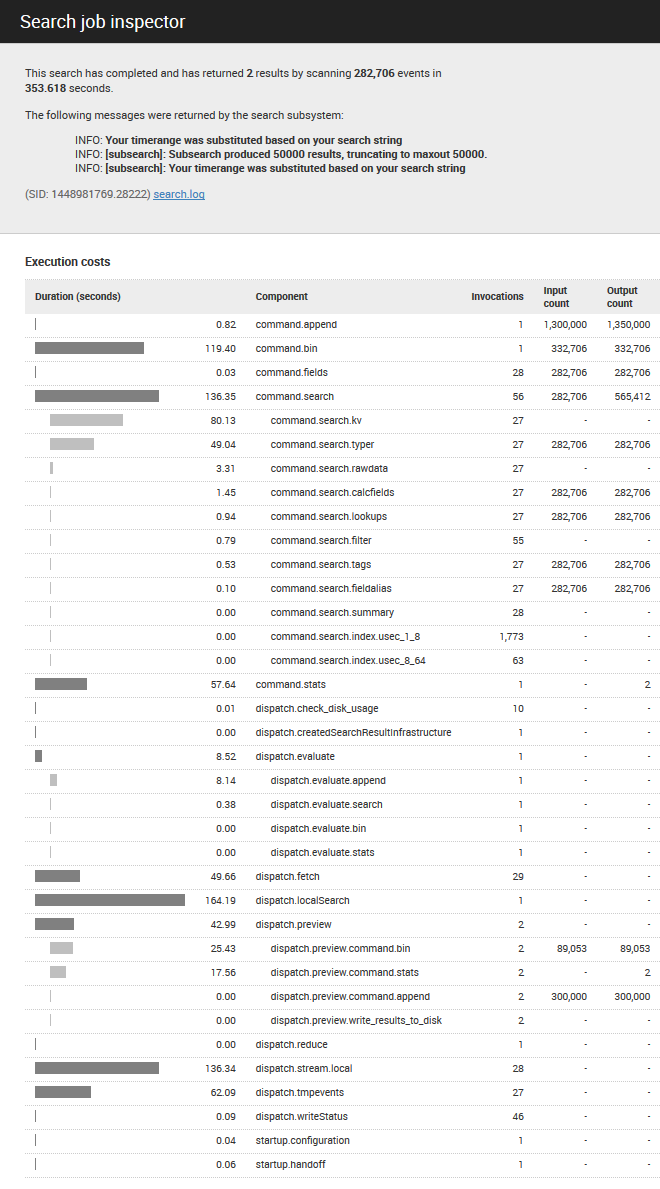

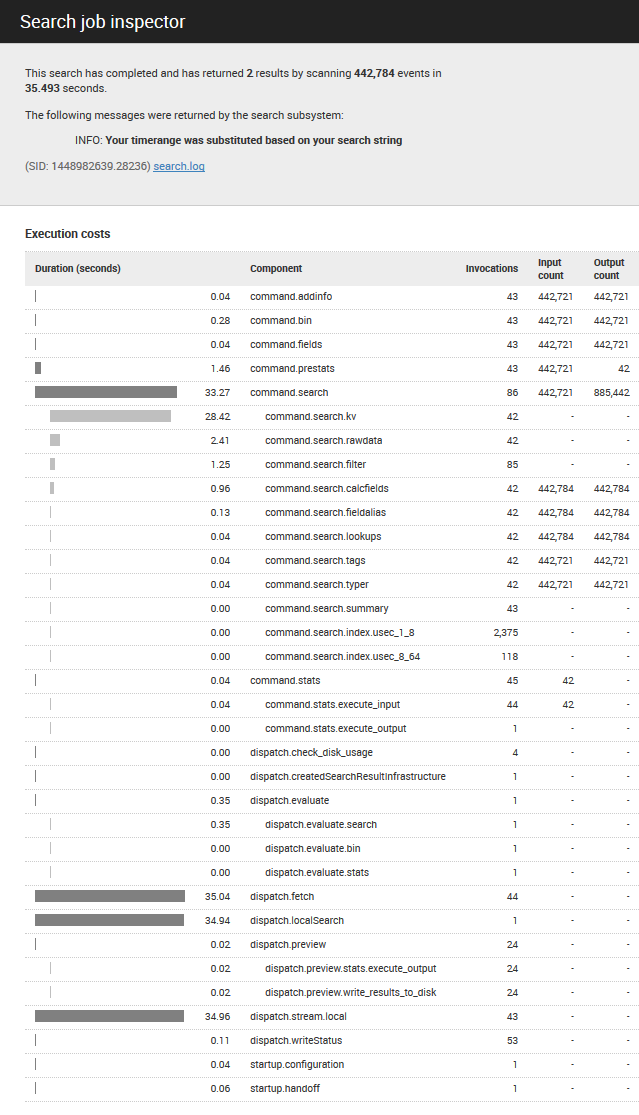

- Time. Take a look at the attached search inspector results for the old search vs. the new search. That’s a huge increase in performance!

- Accuracy. Because append and join are both computationally expensive operations, Splunk wisely limits the events returned from these commands to 50,000 by default. Anything past that limit is dropped, so counts over large data sets can come out too low. Sure, I could have increased these limits, but I figured the limits served a useful purpose, and I did not want to change them without a good reason.

- Cost. Because my searches typically power dashboards with timespan tokens, I have to remember to add the tokens to these sub-searches. This adds one more place for human error (bugs affect the business’s bottom line), or at least more code that needs to be refactored as the dashboard changes over time (labor is the largest expense to a business).
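To make the cost point concrete, here is a sketch of how the two searches might look inside a dashboard panel. It assumes a time picker input whose token is named time_tok (a hypothetical name; use whatever your dashboard defines). With “append”, the token pair has to be repeated inside the sub-search:

```
index=foo earliest=$time_tok.earliest$ latest=$time_tok.latest$
| append [search index=bar earliest=$time_tok.earliest$ latest=$time_tok.latest$]
| stats count by index
```

With a single search, the tokens appear exactly once:

```
(index=foo) OR (index=bar) earliest=$time_tok.earliest$ latest=$time_tok.latest$
| stats count by index
```

If the token is ever renamed, the second version has only one place to update.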